July 17, 2023

What Is ETL Testing: Basics and How to Get Started

Learn why ETL testing is important for accurate data integration, with a step-by-step tutorial on verifying data accuracy in the ETL process.

OVERVIEW

ETL testing is a process aimed at ensuring the accuracy and reliability of data that has been extracted from its source, transformed according to business rules and requirements, and subsequently loaded into the target destination. It involves comprehensive verification and validation of the data at various stages throughout the ETL process.

The world has become data-driven, and organizations depend heavily on accurate, objective data. This data is gathered from multiple sources and must be extracted, transformed, and loaded (ETL) into a data warehouse or analytics platform in a timely manner. Transforming it into a usable, consistent format is essential for sound analysis and decision-making, and the ETL process is designed to carry out this transformation.

However, data processing and transformation are also prone to error. How can one trust data to make transformative decisions when it may be lost, incomplete, or irrelevant to the specific business context?

This is where ETL testing comes into play. This testing approach validates and verifies the reliability and quality of data processed from different sources. It helps you identify anomalies, data discrepancies, and transformation errors that commonly occur during the extraction, transformation, and loading phases. In other words, ETL testing is performed when the complete ETL process needs to be validated to prevent data redundancy and data loss.

Understanding ETL

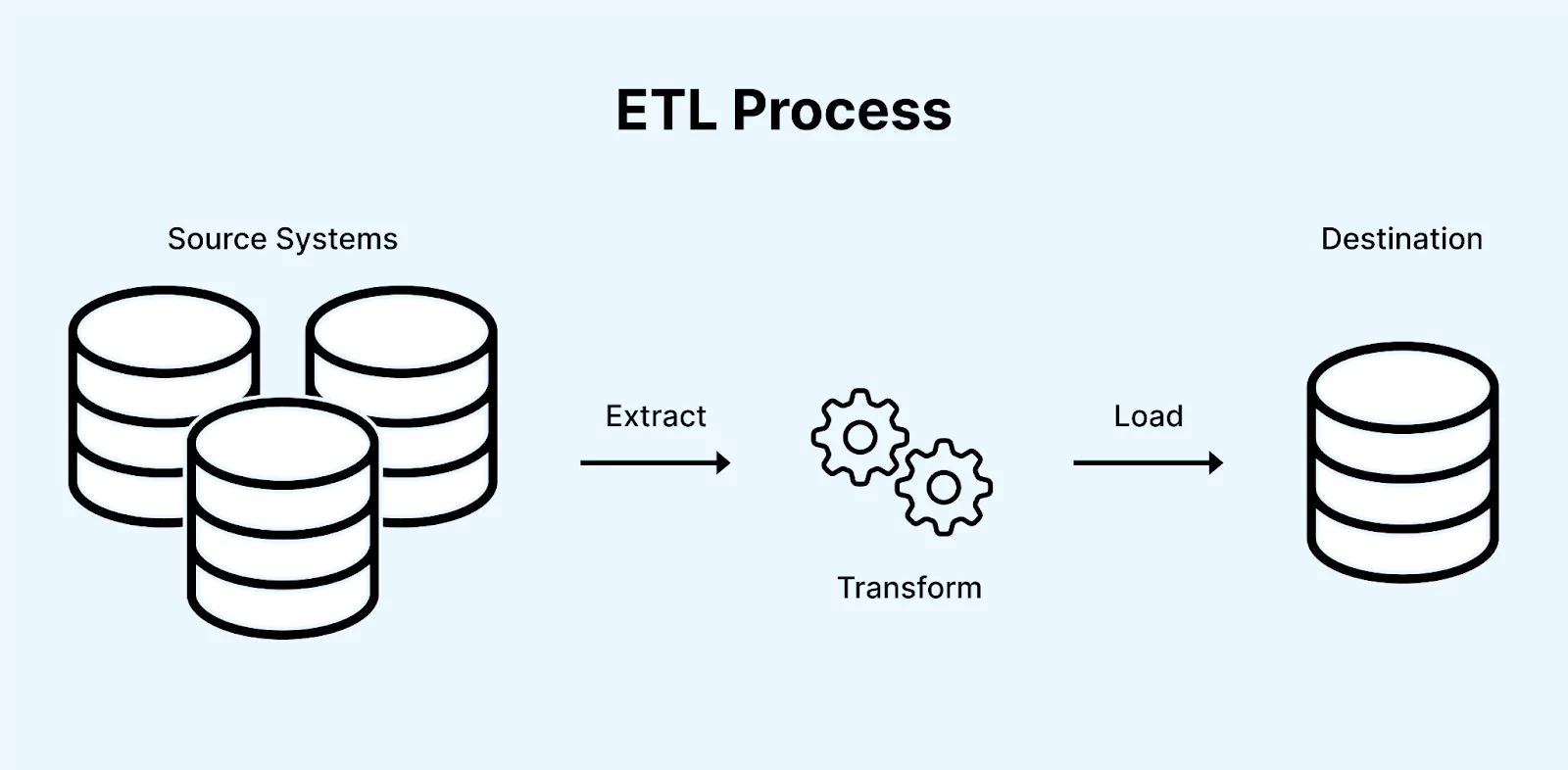

When discussing data processing, we usually refer to a combination of processes rather than a single process. One such pipeline within that combination is called ETL, which stands for Extract, Transform, and Load.

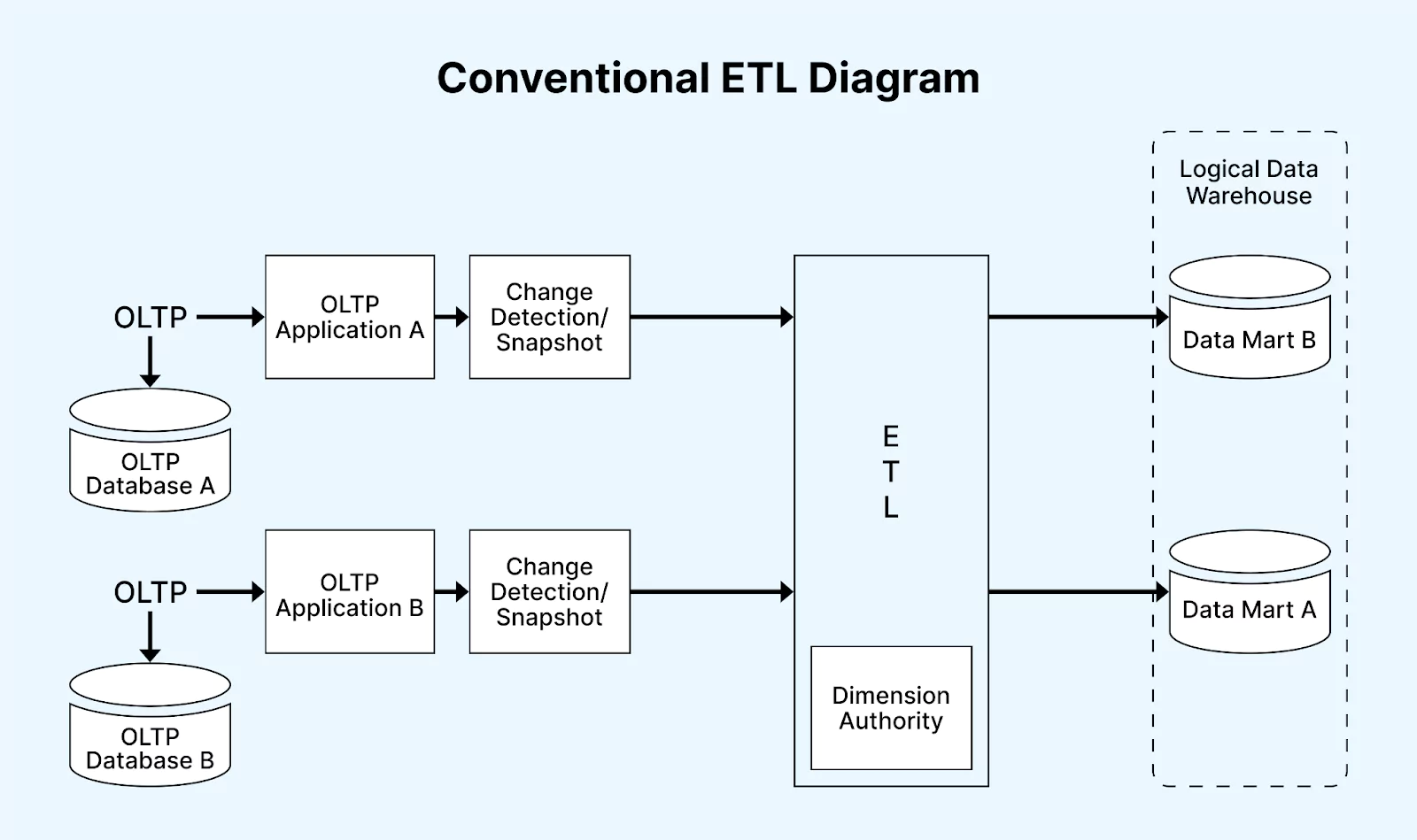

ETL is the process of moving data from source systems into a data warehouse. Data is first extracted from various sources, such as an OLTP (Online Transaction Processing) database. It is then transformed to match the data warehouse schema. Finally, the transformed data is loaded into the warehouse database.

However, note that the data loaded into the data warehouse may also come from non-OLTP systems such as legacy systems, spreadsheets, and text files.

Let's understand how it works:

For instance, consider a retail store with different departments like sales, marketing, and logistics. Each department independently handles customer information and stores the data in different ways. The sales department may store it by customer name, while the marketing department may use customer IDs.

Now, if the store wants to examine a customer's history and understand which products were purchased through different marketing campaigns, it would be a cumbersome task. The solution lies in a data warehouse, which uses ETL to store information from various sources in a standardized structure, transforming dissimilar datasets into a unified one. Business Intelligence (BI) tools can then be used to extract meaningful insights and generate reports from this consolidated data.
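To make the idea concrete, here is a minimal Python sketch of that consolidation. It assumes the sales records are keyed by customer name, the marketing records by customer ID, and that a master lookup (`customer_directory`) maps names to IDs; all names and data are hypothetical, and a real warehouse would use tables rather than in-memory dictionaries.

```python
# Hypothetical departmental data: sales keys customers by name,
# marketing keys them by customer ID.
sales = [
    {"customer_name": "Ada Lovelace", "product": "Laptop", "amount": 1200.0},
    {"customer_name": "Alan Turing", "product": "Monitor", "amount": 300.0},
]
marketing = [
    {"customer_id": 1, "campaign": "Spring Sale"},
    {"customer_id": 2, "campaign": "Back to School"},
]

# Hypothetical master lookup that maps each customer name to a shared ID.
customer_directory = {"Ada Lovelace": 1, "Alan Turing": 2}


def build_customer_history(sales, marketing, directory):
    """Merge both departmental datasets into one structure keyed by customer ID."""
    warehouse = {}
    for row in sales:
        cid = directory[row["customer_name"]]  # resolve name -> shared ID
        entry = warehouse.setdefault(cid, {"purchases": [], "campaigns": []})
        entry["purchases"].append((row["product"], row["amount"]))
    for row in marketing:
        entry = warehouse.setdefault(row["customer_id"], {"purchases": [], "campaigns": []})
        entry["campaigns"].append(row["campaign"])
    return warehouse


history = build_customer_history(sales, marketing, customer_directory)
print(history[1])  # {'purchases': [('Laptop', 1200.0)], 'campaigns': ['Spring Sale']}
```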


ETL Process

In a corporate setting, crucial decisions rely on employees' reports on diverse projects. These reports consist predominantly of data, which underscores the importance of a well-managed data warehouse. The Extract, Transform, Load (ETL) process acts as a vital conduit, connecting different project databases so that their data can be integrated effectively.

The ETL process facilitates communication between these databases: it extracts information from multiple source databases, transforms it into a standardized format, and delivers it to the destination database.

Testing the ETL process differs from traditional database testing in several ways. Database testing typically involves examining smaller volumes of data from databases of similar types. In contrast, data warehouse testing covers a complete data warehouse that integrates data from diverse sources of varying types.

Database testing is typically performed on normalized databases, whereas data warehouse testing deals with denormalized data. Decisions and work culture must align with a well-defined strategy for an organization to function smoothly, and management plays a crucial role in ensuring that all processes are executed correctly and properly validated. Consequently, quality checks on the ETL process are imperative for maintaining a properly validated data warehouse.

Let’s take an in-depth look at the individual components of the ETL process.

Components of the ETL Process

The ETL process involves three crucial components, as explained below:

Extract

The first component of the ETL process is data extraction, usually from homogeneous or heterogeneous sources. Here, the terms homogeneous and heterogeneous indicate that the data may be of a similar type or may vary depending on where it originates. For example, data extracted from an IoT device's sensor may not resemble data generated by a web application.

The sources from where you can extract data include the following:

- Customer Relationship Management (CRM): Examples include Zoho and HubSpot.

- Enterprise Resource Planning (ERP): Examples include Microsoft Dynamics and Oracle E-Business Suite.

- Line of Business (LOB): Examples include accounting, supply chain management, and resource planning applications.

The extracted data can come in two broad kinds of formats: structured formats (such as CSV, XML, and Excel) and unstructured formats (such as email and screen-scraped data). Other formats include JSON, flat files, IMS (Information Management System), and VSAM (Virtual Storage Access Method).
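As an illustration of bringing different formats into one shape during extraction, the following sketch uses Python's standard `csv` and `json` modules. The order fields and sample payloads are hypothetical; the point is that every source is normalized into the same typed record.

```python
import csv
import io
import json

# Hypothetical raw extracts: the same kind of order record arriving as CSV and as JSON.
CSV_EXTRACT = "order_id,customer_id,amount\n101,1,250.00\n102,2,99.50\n"
JSON_EXTRACT = '[{"order_id": 103, "customer_id": 1, "amount": 15.25}]'


def to_order(row):
    """Normalize one raw record into the common (typed) target shape."""
    return {
        "order_id": int(row["order_id"]),
        "customer_id": int(row["customer_id"]),
        "amount": float(row["amount"]),
    }


def extract_csv(text):
    # csv.DictReader yields every field as a string, so to_order casts explicitly.
    return [to_order(row) for row in csv.DictReader(io.StringIO(text))]


def extract_json(text):
    # json.loads already returns numbers, but casting keeps types consistent.
    return [to_order(row) for row in json.loads(text)]


records = extract_csv(CSV_EXTRACT) + extract_json(JSON_EXTRACT)
print(len(records))  # 3
```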

In the ETL process, a robust extraction method is crucial because extraction is the first stage that interacts with raw data. There are three main types of extraction methods:

- Partial extraction with update notification: This is the most performance-efficient extraction method. The source system sends a notification when its records change, so only the changed data needs to be extracted.

- Partial extraction without update notification: Some systems cannot send an update notification to trigger extraction, but they can return the updated records when requested. In such cases, the extraction is scheduled to run at predefined intervals, and each run requests the records that have changed since the previous one, without relying on notifications from the source system.

- Full extraction: When neither of the above options is available, the system provides no update notifications and cannot identify changed records when queried. In such cases, we perform a full extraction, retrieving all the data, and then identify the changes by comparing the new extract with the previous extraction file. Because the complete dataset, including unchanged and duplicate records, is transferred every time, this approach is the least efficient.
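The difference between the partial and full approaches can be sketched in Python. This assumes the source exposes a `last_modified` timestamp per row, which many but not all systems do; the rows and the watermark value are hypothetical, and notification-based extraction is not shown.

```python
from datetime import datetime

# Hypothetical source rows; assumes the source tracks a last_modified timestamp.
source_rows = [
    {"id": 1, "name": "Ada", "last_modified": datetime(2023, 7, 1)},
    {"id": 2, "name": "Alan", "last_modified": datetime(2023, 7, 10)},
    {"id": 3, "name": "Grace", "last_modified": datetime(2023, 7, 15)},
]


def partial_extract(rows, watermark):
    """Partial extraction without notification: a scheduled job asks the source
    for records changed since the last successful run (the watermark)."""
    return [r for r in rows if r["last_modified"] > watermark]


def full_extract_diff(current_rows, previous_rows):
    """Full extraction: pull every row, then diff against the previous extract
    to find inserted, updated, and deleted records."""
    prev = {r["id"]: r for r in previous_rows}
    curr = {r["id"]: r for r in current_rows}
    inserted = [curr[k] for k in sorted(curr.keys() - prev.keys())]
    deleted = [prev[k] for k in sorted(prev.keys() - curr.keys())]
    updated = [curr[k] for k in sorted(curr.keys() & prev.keys()) if curr[k] != prev[k]]
    return inserted, updated, deleted


changed = partial_extract(source_rows, watermark=datetime(2023, 7, 5))
print([r["id"] for r in changed])  # [2, 3]
```

In a scheduled job, the watermark would typically be persisted after each successful run, for example as the largest `last_modified` value extracted.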

Transform

The next crucial component of the ETL process is transformation, in which rules and functions are applied to the extracted data to bring it into a single format. These include data validation rules, data conversion functions, data cleaning rules, and data aggregation functions. In short, transformation converts data from multiple sources and formats into a single system format. Let us understand this in simple terms.

The data extracted from source systems is generally raw and cannot be used directly in that form. It may contain flaws or errors, such as duplicate records, that can distort the business's analytics. Transformation therefore applies techniques such as data validation, cleansing, formatting, aggregation, mapping, and integration to clean the data and make it acceptable to the data warehouse.

During transformation, the raw data undergoes the following sub-processes:

- Cleaning: This involves removing errors and inconsistencies to improve data quality, for example, correcting irrelevant information and removing duplicate data.

- Filtering: Here, you select the relevant rows and columns based on particular criteria and conditions, such as date and time ranges, numeric conditions, boolean conditions, text matching, and categorical values. This keeps only the data that meets the specified criteria.

- Joining: This process involves linking related data from multiple sources.

- Sorting: Here, you sort the data by one or more columns, generally in ascending or descending order, for effective data analysis.

- Splitting: This is the process of dividing a single data field into multiple columns or attributes. It is useful when a single field contains multiple values.

- Deduplication: In this process, duplicate records are identified and removed from the database. This prevents redundant information from distorting data processing and analysis.

- Summarization: The data is summarized to provide a condensed view. For example, calculating the total profit for a year is a summarization that simplifies analysis and reporting.

- Data validation: This involves checking the data against predefined rules and criteria to find missing data or data that does not meet the specified requirements.

- Derivation: Here, you apply business rules to derive new values and check them for validity. Any incorrect data you find can be returned to the source.
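Several of these sub-processes are often chained in a single transformation step. The sketch below uses plain Python and hypothetical order records to show cleaning, deduplication, filtering, splitting, sorting, and summarization; production pipelines usually express the same rules in SQL or an ETL tool.

```python
from collections import defaultdict

# Hypothetical raw extract with typical flaws: stray whitespace, odd casing, a duplicate row.
raw = [
    {"order_id": 1, "full_name": "  ada lovelace ", "region": "EU", "amount": 120.0, "year": 2022},
    {"order_id": 1, "full_name": "  ada lovelace ", "region": "EU", "amount": 120.0, "year": 2022},
    {"order_id": 2, "full_name": "alan turing", "region": "EU", "amount": 80.0, "year": 2023},
    {"order_id": 3, "full_name": "grace hopper", "region": "US", "amount": 200.0, "year": 2023},
]


def transform(rows, year):
    # Cleaning: trim whitespace and normalize capitalization (copies, so `rows` is untouched).
    cleaned = [{**r, "full_name": r["full_name"].strip().title()} for r in rows]

    # Deduplication: keep the first record seen for each order_id.
    seen, deduped = set(), []
    for r in cleaned:
        if r["order_id"] not in seen:
            seen.add(r["order_id"])
            deduped.append(r)

    # Filtering: keep only the rows for the requested year.
    filtered = [r for r in deduped if r["year"] == year]

    # Splitting: break one field into two columns (assumes "First Last" names).
    for r in filtered:
        r["first_name"], r["last_name"] = r["full_name"].split(" ", 1)

    # Sorting: highest amount first.
    filtered.sort(key=lambda r: r["amount"], reverse=True)

    # Summarization: total amount per region.
    totals = defaultdict(float)
    for r in filtered:
        totals[r["region"]] += r["amount"]
    return filtered, dict(totals)


rows_2023, totals_2023 = transform(raw, year=2023)
print(totals_2023)  # {'US': 200.0, 'EU': 80.0}
```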

Load

The last component of ETL is loading, which depends mainly on business requirements and on how the data will be used. Some examples of such requirements are as follows:

- Data is used for analysis purposes.

- Data is employed to provide search results.

- Data is leveraged for machine learning algorithms.

- Data is utilized in real-time applications.

Depending on how the data will be used, it is loaded into the data warehouse in the format the business prefers. For example, it could be a batch load or a full load, depending on business needs. However, loading data can be time-consuming. To address this and optimize the system, loading is divided into three categories:

- Initial loading: This is the first-time loading of data into the data warehouse. Because it involves transferring a huge amount of data, it takes more time.

- Incremental loading: This loads only the new data that needs to be added to the database, which saves a lot of time and keeps the loading process efficient.

- Refresh: This reloads the complete dataset, replacing the previous data in the warehouse. Because it is very time-consuming and carries a risk of duplicated data, it should be done only when required.
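The three loading categories map naturally onto SQL statements. Below is a sketch that uses Python's built-in `sqlite3` module as a stand-in warehouse with a hypothetical `dim_customer` table. The incremental load is written as an upsert, a common variant that also updates existing keys; SQLite's `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later.

```python
import sqlite3


def create_target(conn):
    # Hypothetical warehouse table; id is the business key used for upserts.
    conn.execute("CREATE TABLE IF NOT EXISTS dim_customer (id INTEGER PRIMARY KEY, name TEXT)")


def initial_load(conn, rows):
    """Initial loading: first-time bulk insert into the empty table."""
    conn.executemany("INSERT INTO dim_customer (id, name) VALUES (?, ?)", rows)


def incremental_load(conn, rows):
    """Incremental loading: insert only new keys; this variant also updates
    rows whose key already exists (an upsert)."""
    conn.executemany(
        "INSERT INTO dim_customer (id, name) VALUES (?, ?) "
        "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
        rows,
    )


def refresh_load(conn, rows):
    """Refresh: replace the full contents of the table in one transaction."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("DELETE FROM dim_customer")
        conn.executemany("INSERT INTO dim_customer (id, name) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
create_target(conn)
initial_load(conn, [(1, "Ada"), (2, "Alan")])
incremental_load(conn, [(2, "Alan Turing"), (3, "Grace")])
print(conn.execute("SELECT id, name FROM dim_customer ORDER BY id").fetchall())
# [(1, 'Ada'), (2, 'Alan Turing'), (3, 'Grace')]
```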

ETL vs. ELT: Key Differences

Now that we have covered the ETL process and testing, let's look at a similar concept: ELT, which stands for Extract, Load, and Transform. It also involves data extraction, cleaning, and processing. However, the order of the last two phases is switched: in the ELT process, data is loaded first and then transformed inside the data warehouse.

ELT and ETL are often compared because they share similar concepts and principles in the Big Data domain. Neither process can be said to be more accurate than the other; the choice depends on your organization and its requirements. Still, it is important to understand their differences to choose the best approach for a particular organization's data processing.

In a data pipeline, transformation steps such as joining, aggregating, filtering, and cleaning are usually the most time-consuming activities, and complex, high-volume data can significantly increase the time they take. To make loaded data available in the system or data warehouse for analysis without waiting for transformation to finish, it is advised to load the data first and transform it afterward. You may wonder: what is the benefit of switching the steps?

When you load the data first, you can work directly with raw and unstructured data, which can yield additional insights. It also provides quick access to the data, allowing you to perform initial analysis or make critical decisions based on the raw data.

In ELT, data is loaded into the data warehouse and the transformation is executed within the warehouse itself. In ETL, by contrast, transformation is performed on a separate server, which adds complexity. This is why ELT pipelines are often faster than ETL pipelines. However, the decision to load data first and transform it later should be based on specific requirements and the nature of the analysis being performed.
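In ELT, the transformation usually runs as SQL inside the target system after the raw data has landed. The sketch below uses an in-memory SQLite database as a stand-in warehouse (an assumption; ELT targets are typically cloud data warehouses) with hypothetical `raw_orders` and `orders` tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load first: land the raw, untransformed records in a staging table.
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, " ada ", "120.50"), (2, "alan", "80"), (2, "alan", "80")],
)

# Transform afterwards, as SQL running inside the target database itself:
# trim and upper-case names, cast amounts to numbers, and drop exact duplicates.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT order_id,
           UPPER(TRIM(customer)) AS customer,
           CAST(amount AS REAL)  AS amount
    FROM raw_orders
    """
)

print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'ADA', 120.5), (2, 'ALAN', 80.0)]
```

Because the raw table is kept, analysts can still query `raw_orders` directly, which is the quick-access benefit described above.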

What is ETL Testing?

ETL testing is the process of validating that data from source systems is completely extracted, correctly transferred, and accurately formatted in the new system. It is especially important when testing data warehouses. In other words, ETL testing ensures that the data loaded into the data warehouse is accurate once the transformation process is complete.

By identifying and preventing data quality issues such as duplicate data or data loss, ETL testing saves valuable time and resources in the long term. It also helps ensure smooth and efficient integration and migration processes by eliminating potential bottlenecks that could hinder the performance of both the source and destination systems.

The ETL testing process ensures that data transfers adhere to transformation rules and pass validity checks. It differs from the data reconciliation used in database testing because ETL testing applies specifically to data warehouse systems, whose purpose is to gather relevant information for analytics and business intelligence. However, ETL testing comes with specific challenges that need to be addressed, including the following:

- Imperfections in commercial development.

- Mismatched and duplicated information.

- Challenges in constructing test data.

- Testers lack the authority to conduct ETL tests themselves.

- The immense quantity and complexity of the data involved.

- Absence of a comprehensive test platform.

- Data failure throughout the ETL process.

These challenges can be overcome by performing ETL testing accurately and effectively and fulfilling all of its underlying test objectives. Some of the test objectives are as follows:

- Ensure that all the anticipated data is extracted and loaded to the data warehouse without any data loss.

- Check that the data transformed from the source system matches the expected values and meets quality standards.

- Check the integrity constraints and relationships between the different data entities.

- Validate the data against predefined rules, such as data types.

- Verify how exceptional data scenarios, such as missing values, are handled.

- Compare the source and target data to identify any inconsistencies.

Why is ETL Testing Important?

Organizations routinely transfer large volumes of data, often measured in terabytes or even petabytes. For larger organizations like Facebook and YouTube, the volume is hard even to estimate. At this scale, testing the data manually is not just challenging but practically impossible.

ETL tools have made performing the ETL process and testing it much easier. ETL testing allows the process to be automated, applying logic and rules to filter the data. With ETL testing, you can save time while ensuring successful data transfers, and work with different data sources in parallel. Hence, it has become a crucial component of data mining and warehousing.

Benefits of ETL Testing

The significance of ETL testing lies in its ability to ensure that data extracted from different sources is complete, accurate, and transformed in line with business rules. It also ensures that the data is loaded accurately into the data warehouse. Here are some benefits of ETL testing:

- Intelligent business decisions: By verifying and validating business rules during ETL testing, accurate data is populated in reports. This, in turn, enables businesses to make informed and intelligent decisions based on reliable information.

- Timely availability of data: It ensures that accurate and up-to-date data is available on time. Access to historical and recent data per specific requirements allows for timely decision-making and analysis.

- Performance of data processing: It helps verify that data is stored in denormalized form, making efficient use of a star or snowflake schema at a granular level, so that large volumes of data can be processed quickly.

- Importance of data security: During ETL testing, row-level security is verified to confirm that only authorized users can access the required information. This improves data security by limiting access to sensitive data and safeguarding it from unauthorized access.

When should you perform ETL Testing?

Many professionals working on ETL processes and their management find it difficult to know when ETL testing needs to be performed. Here are some scenarios where executing ETL testing is especially important to ensure data integrity.

- When a new data warehouse has been set up and loaded with data, you must perform ETL testing to verify that the data has been stored successfully in the new environment.

- When new data sources are added to an existing data warehouse, you must check their integration. ETL tests help ensure that the new data is accurately synchronized with the data already in the warehouse.

- After migrating data from one system or database to another, run ETL tests to ensure that the migrated data is reliable and consistent.

- Whenever data is transferred or moved between systems, an ETL test should be run to confirm that the transfer succeeded.

- ETL testing needs to be executed if there are concerns about data quality or performance problems in the ETL process.

- If data quality issues are suspected in the source or target systems, ETL testing is done to verify the quality of the data and uncover any underlying errors.

Categories of ETL Testing

Given the ETL process, its components, and its uses described above, it is important to ensure that the process is reliable, which is what ETL tests accomplish. ETL testing is divided into the following categories so that every ETL component is covered.

Data Warehouse Testing

Data warehouse testing checks whether the data stored in a data warehouse is reliable and accurate. Its primary objective is to verify that the integrated data is dependable enough for the company to make important, informed decisions.

Migration Testing

During migration testing, the existing database serves as the source, and its data is transferred to the new target database. Migration testing is required whenever data is moved from an existing system to a new target database. It can be performed manually, but automating the ETL process for migrating the data is preferred because it makes the testing more efficient.

Alter Application

In this ETL testing category, no new database is used. Instead, new data extracted from different databases is added to the existing data warehouse. In this process, customers are required to introduce updated business rules and regulations so that the data warehouse continues to function and develop properly.

Report Testing

Reports are crucial for making business decisions because they are the basis for evaluating project outcomes and present the results of the data warehouse. Testing these reports involves thoroughly reviewing and validating their content, including their data and calculations, to ensure that the information presented is accurate and reliable enough to base business decisions on.

Types of ETL Testing

Several types of ETL testing are performed during the ETL process, each with a specific function. Let us look at each type:

- Production Validation Testing: Also known as table balancing or production reconciliation, this type of testing verifies the data in the production system (typically the data warehouse) by comparing it against the source data. It helps detect faulty logic, failed loads, and operational processes that fail to load data into the system.

- Source to Target Count Testing: This testing type verifies that the number of records loaded into the target database matches the expected number of records.

- Source to Target Data Testing: This verifies that the data transferred to the target system has not been lost or truncated and that its values match expectations after transformation.

- Application Upgrades: An application upgrade test is performed when the ETL application or associated infrastructure experiences changes and upgrades. It helps to ensure the smooth flow of the ETL process after the upgrades without any errors or data inconsistencies.

- Metadata Testing: Metadata is data that describes the structure and relationships of other data. This type of test checks the data types, lengths, indexes, and constraints in the ETL application's metadata to ensure that the metadata matches the data and is used appropriately in the ETL process.

- Data Completeness Testing: This test verifies that all the data extracted from the source system is successfully loaded into the target system or data warehouse. It should not be skipped, because it ensures that no data goes missing or is dropped during the ETL process.

- Data Accuracy Testing: This test verifies that data extracted from the source system is loaded and transformed accurately, as expected, in the data warehouse.

- Data Transformation Testing: This test focuses on the transformation logic and rules applied to the data during the ETL process. It usually requires more than a single SQL query: multiple queries are written so that the results can be compared robustly with the expected output in the target. This often means running SQL queries for each row of data to verify the transformation rules.

- Reporting Testing: It involves reviewing the data presented in a summary report and verifying that the layout and functionality of the report align with expectations. Additionally, calculations within the report are evaluated to ensure accuracy and correctness.

- Data Quality Testing: This test assesses the quality and accuracy of the data loaded into the data warehouse, to avoid errors caused by problems such as invalid dates or order numbers in business processes. It includes syntax tests (invalid characters, patterns, case order) and reference tests (number, date, precision, and null checks). Dirty data is reported based on invalid characters, and data is checked against the data model, for example, for valid customer IDs.

- Incremental ETL Testing: This test verifies the incremental updates applied to the data in each ETL run. In simple terms, incremental ETL testing checks the integrity of both the existing and the newly added data, and verifies that inserts and updates are processed as expected.

- GUI/Navigation Testing: This test validates the navigation and GUI (Graphical User Interface) aspects of the front-end reports, as well as of the ETL applications and their related tools.
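Source to target count testing and source to target data testing are among the easiest of these checks to automate with SQL. The following Python sketch uses SQLite with hypothetical `src_customer` and `tgt_customer` tables; in practice the source and target usually live in different systems, so the queries would run separately and their results be compared in code. The missing row and the truncated email in the target are deliberate.

```python
import sqlite3

# Hypothetical source and target tables, placed in one SQLite database for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE src_customer (id INTEGER, email TEXT);
    CREATE TABLE tgt_customer (id INTEGER, email TEXT);
    INSERT INTO src_customer VALUES
        (1, 'ada@example.com'), (2, 'alan@example.com'), (3, 'grace@example.com');
    INSERT INTO tgt_customer VALUES
        (1, 'ada@example.com'), (2, 'alan@exam');
    """
)


def count_check(conn, source, target):
    """Source to target count testing: compare row counts."""
    # Identifiers are interpolated, so pass only trusted table names.
    src = conn.execute(f"SELECT COUNT(*) FROM {source}").fetchone()[0]
    tgt = conn.execute(f"SELECT COUNT(*) FROM {target}").fetchone()[0]
    return src, tgt, src == tgt


def data_check(conn, source, target, key, column):
    """Source to target data testing: list keys that are missing from the
    target or whose value differs (e.g. because it was truncated)."""
    return conn.execute(
        f"""
        SELECT s.{key}, s.{column}, t.{column}
        FROM {source} s LEFT JOIN {target} t ON s.{key} = t.{key}
        WHERE t.{key} IS NULL OR s.{column} IS NOT t.{column}
        ORDER BY s.{key}
        """
    ).fetchall()


print(count_check(conn, "src_customer", "tgt_customer"))  # (3, 2, False)
print(data_check(conn, "src_customer", "tgt_customer", "id", "email"))
# [(2, 'alan@example.com', 'alan@exam'), (3, 'grace@example.com', None)]
```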

Responsibilities of an ETL Tester

ETL testers play a crucial role in maintaining the ETL process by ensuring that extraction, transformation, and loading are accurate and reliable. Their key responsibilities include the following:

- They write test cases based on the requirements to ensure that data is transferred completely and accurately.

- They plan for robust ETL processes and design and maintain test strategies.

- The ETL testers are responsible for executing the test cases using appropriate tools for ETL testing, like SQL, Selenium, and JUnit.

- They validate and verify the completeness of data extraction, transformation, and loading operations.

- While performing ETL testing, they identify and report errors or defects in the ETL process and work closely with developers to fix them.

- ETL testers collaborate with project managers, business analysts, and other professionals involved in the ETL process. This helps them understand the explicit requirements and ensure that the ETL process meets them.

- ETL testers actively participate in reviewing and inspecting ETL processes and give feedback on their quality and compliance.

- ETL testers maintain and update test documentation, such as test plans, test cases, and bug reports.

- They have a crucial role in supporting the ETL process for continuous improvement. For example, they implement strategies for adopting new technology and best practices.


How to Create ETL Test Cases

Creating ETL test cases is a prerequisite for performing ETL testing. Test cases verify and analyze the data as it moves from the source system into the target data warehouse, and they are implemented across various tools and databases in the information management industry. The main objective when designing ETL test cases is to create SQL scripts that validate the data flow and check the accuracy of the ETL process.

Hence, when creating ETL test cases, you should be familiar with two essential documents, the ETL mapping document and the database schema, so that your test cases include all the information needed for effective testing. Let us look at each below.

- ETL Mapping Sheets: These sheets contain comprehensive information about the source and destination tables, including all columns and their corresponding look-up in reference tables. ETL testers should have proficiency in SQL queries, as ETL testing often involves writing complex queries with multiple joins to validate data at different stages of the ETL process. ETL mapping sheets are extremely helpful in constructing queries for data verification.

- Database Schema of the Source and Target: ETL testers should have access to the database schema of both the source and target systems. This schema provides detailed information about the structure, organization, and relationships of the database tables. It serves as a reference to verify any specific details mentioned in the ETL mapping sheets.

Here are the common steps to create test cases that ensure complete coverage of the ETL mapping scenarios and transformation rules:

- Critically evaluate the ETL mapping documents that describe how data is extracted, transformed, and loaded. This analysis reveals the test scenarios, such as data type conversions, that require testing.

- While identifying test scenarios, determine how the data should be transformed, aggregated, or cleansed.

- Write SQL scripts that express the expected transformations and data validations.

- Review the mapping documents to ensure that the test cases cover every step of the ETL process.

- After reviewing, verify the data flow and ensure that the SQL scripts and the transformations in the mapping document are correctly defined.

- Create test cases that validate data quality, such as data completeness and reliability.
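The steps above, reading a transformation rule from the mapping document and writing a SQL script that checks it, can be sketched as follows. The mapping rows, tables, and the `run_mapping_tests` helper are hypothetical. SQLite's null-safe `IS NOT` operator is used so that a NULL on only one side counts as a mismatch.

```python
import sqlite3

# Hypothetical mapping-sheet rows: each target column and the rule that derives it
# from (unqualified) source columns.
MAPPING = [
    {"target_col": "full_name", "source_expr": "first_name || ' ' || last_name"},
    {"target_col": "country_code", "source_expr": "UPPER(country)"},
]

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE src_person (id INTEGER, first_name TEXT, last_name TEXT, country TEXT);
    CREATE TABLE dim_person (id INTEGER, full_name TEXT, country_code TEXT);
    INSERT INTO src_person VALUES (1, 'Ada', 'Lovelace', 'gb'), (2, 'Alan', 'Turing', 'gb');
    INSERT INTO dim_person VALUES (1, 'Ada Lovelace', 'GB'), (2, 'Alan Turing', 'gb');
    """
)


def run_mapping_tests(conn, mapping):
    """Build one SQL check per mapping row: the rule applied to the source must
    equal the value loaded into the target. Returns (column, id) failures."""
    failures = []
    for rule in mapping:
        sql = (
            "SELECT s.id FROM src_person s JOIN dim_person t ON s.id = t.id "
            f"WHERE ({rule['source_expr']}) IS NOT t.{rule['target_col']} "
            "ORDER BY s.id"
        )
        failures += [(rule["target_col"], row[0]) for row in conn.execute(sql)]
    return failures


print(run_mapping_tests(conn, MAPPING))  # [('country_code', 2)]
```

Keeping the rules as data means a new mapping row automatically produces a new test without writing another script by hand.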

The steps above help you design detailed test cases that cover ETL mapping scenarios, transformation rules, SQL scripts, and mapping document verification. The examples that follow will help clarify these concepts.

ETL Test Scenarios and Test Cases

Below are examples of ETL test scenarios and test cases pertaining to the ETL process and testing.

Test Scenario: Mapping Doc Validation

Test Cases:

- Verify if the mapping document contains the corresponding ETL information.

- Ensure that change logs are maintained in every mapping document.

Test Scenario: Validation

Test Cases:

- Validate the structure of the source and target tables against the mapping document.

- Check if the source and target data types are the same.

- Ensure that the data type lengths in the source and target are equal.

- Verify that data field types and formats are specified.

- Confirm that the target data type length is not less than the source data type length, so that values are not truncated.

- Validate the column names in the table against the mapping document.
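Structure validation against the mapping document can be partly automated by reading the target table's metadata. This sketch uses SQLite's `PRAGMA table_info` from Python; the expected schema stands in for a hypothetical mapping document. SQLite records declared types such as `VARCHAR(50)` but does not enforce their lengths, so only the declarations are compared.

```python
import sqlite3

# Hypothetical expected structure, as it might be recorded in the mapping document.
EXPECTED_SCHEMA = {"id": "INTEGER", "email": "VARCHAR(100)", "signup_date": "DATE"}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tgt_customer (id INTEGER, email VARCHAR(50), created DATE)")


def validate_structure(conn, table, expected):
    """Compare the table's column names and declared types with the mapping document."""
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    actual = {row[1]: row[2].upper() for row in conn.execute(f"PRAGMA table_info({table})")}
    issues = []
    for col, col_type in expected.items():
        if col not in actual:
            issues.append(f"missing column: {col}")
        elif actual[col] != col_type.upper():
            issues.append(f"type mismatch on {col}: expected {col_type}, found {actual[col]}")
    for col in sorted(actual.keys() - expected.keys()):
        issues.append(f"unexpected column: {col}")
    return issues


for issue in validate_structure(conn, "tgt_customer", EXPECTED_SCHEMA):
    print(issue)
```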

Test Scenario: Constraint Validation

Test Cases: Ensure that the expected constraints are defined for specific tables.

Test Scenario: Data Consistency Issues

Test Cases:

- Check for variations in data types and lengths for attributes with the same semantic definition.

- Verify the proper use of integrity constraints.

Test Scenario: Completeness Issues

Test Cases:

- Ensure all the expected data is loaded into the target table.

- Compare the record counts between the source and target.

- Check for any rejected records.

- Validate that data is not truncated in the target table columns.

- Perform boundary value analysis.

Test Scenario: Correctness Issues

Test Cases:

- Identify and validate misspelled or inaccurately recorded data.

- Check for null, non-unique, or out-of-range data.
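Correctness checks like these are commonly written as queries that should return no rows. In the sketch below (SQLite via Python, hypothetical tables), a misspelled status is caught by comparing it against a reference table of valid codes; the specific rules are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE ref_status (code TEXT);
    INSERT INTO ref_status VALUES ('SHIPPED'), ('PENDING');
    CREATE TABLE tgt_order (order_id INTEGER, customer_id INTEGER, quantity INTEGER, status TEXT);
    INSERT INTO tgt_order VALUES
        (1, 10, 2, 'SHIPPED'),
        (2, NULL, 1, 'SHIPPED'),
        (3, 12, -4, 'PENDING'),
        (4, 13, 3, 'SHIPED');
    """
)

# Each check returns the offending order_ids; an empty list means the check passes.
# The rules themselves (mandatory customer_id, quantity 1-1000, status from
# ref_status) are illustrative assumptions, not universal requirements.
CHECKS = {
    "null customer_id": "SELECT order_id FROM tgt_order WHERE customer_id IS NULL",
    "quantity out of range": "SELECT order_id FROM tgt_order WHERE quantity NOT BETWEEN 1 AND 1000",
    "unknown status (possible misspelling)":
        "SELECT order_id FROM tgt_order WHERE status NOT IN (SELECT code FROM ref_status)",
}


def run_checks(conn, checks):
    return {name: [row[0] for row in conn.execute(sql)] for name, sql in checks.items()}


for name, bad in run_checks(conn, CHECKS).items():
    print(f"{name}: {'PASS' if not bad else 'FAIL'} {bad}")
```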

Test Scenario: Transformation

Test Cases: Validate the correctness of data transformations.

Test Scenario: Data Quality

Test Cases:

- Perform number checks and validate the data.

- Check date formats and consistency across all records.

- Perform precision checks.

- Validate the data.

- Perform null checks.

Test Scenario: Null Validation

Test Cases: Verify that there are no null values in columns specified as "Not Null".

Test Scenario: Duplicate Check

Test Cases:

- Validate the uniqueness of key fields, primary keys, and any other columns as per the business requirements.

- Check for duplicate values in columns extracted from multiple sources combined into one column.

- Ensure no duplicates exist in combinations of multiple columns within the target table.
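Duplicate checks usually come down to a `GROUP BY ... HAVING COUNT(*) > 1` query. The hypothetical helper below takes the list of columns that should be unique together: passing a single key column checks primary-key uniqueness, while passing several checks a composite business key. The table and data are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE tgt_enrollment (student_id INTEGER, course_id TEXT, term TEXT);
    INSERT INTO tgt_enrollment VALUES
        (1, 'ETL101', '2023S'),
        (1, 'ETL101', '2023S'),
        (1, 'ETL101', '2023F'),
        (2, 'SQL200', '2023S');
    """
)


def find_duplicates(conn, table, columns):
    """Return every combination of `columns` that occurs more than once, with its count."""
    cols = ", ".join(columns)  # identifiers cannot be bound as parameters; pass trusted names only
    sql = (
        f"SELECT {cols}, COUNT(*) FROM {table} "
        f"GROUP BY {cols} HAVING COUNT(*) > 1 ORDER BY {cols}"
    )
    return conn.execute(sql).fetchall()


# Business rule (assumed): a student enrolls in a course at most once per term.
print(find_duplicates(conn, "tgt_enrollment", ["student_id", "course_id", "term"]))
# [(1, 'ETL101', '2023S', 2)]
```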

Test Scenario: Date Validation

Test Cases: Validate date values for various purposes in the ETL development, such as row creation date, identifying active records, and generating updates and inserts.

Test Scenario: Complete Data Validation

Test Cases:

- Validate the complete data set in the source and target tables using minus queries.

- Compare source minus target and target minus source.

- Identify mismatching rows from the minus query results.

- Match rows between source and target using the intersect statement.

- Ensure the count returned by the intersect query matches the individual counts of the source and target tables.

- Detect duplicate rows: because minus and intersect queries return distinct rows, a table whose row count is greater than its intersect count plus its minus count contains duplicate rows.
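The minus/intersect comparison can be sketched with SQLite, which spells Oracle's `MINUS` as `EXCEPT`. The tables are hypothetical. Because both set operators return distinct rows, a table's distinct row count equals its intersect count plus its minus count, so any table with more rows than that must contain duplicates.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src (id INTEGER, name TEXT);
    CREATE TABLE tgt (id INTEGER, name TEXT);
    INSERT INTO src VALUES (1, 'alpha'), (2, 'beta'), (3, 'gamma');
    INSERT INTO tgt VALUES (1, 'alpha'), (2, 'BETA'), (3, 'gamma'), (3, 'gamma');
""")


def scalar(sql):
    return conn.execute(sql).fetchone()[0]


# SQLite's EXCEPT plays the role of MINUS; both return distinct rows.
src_minus_tgt = conn.execute("SELECT * FROM src EXCEPT SELECT * FROM tgt").fetchall()
tgt_minus_src = conn.execute("SELECT * FROM tgt EXCEPT SELECT * FROM src").fetchall()
matched = scalar("SELECT COUNT(*) FROM (SELECT * FROM src INTERSECT SELECT * FROM tgt)")
src_count = scalar("SELECT COUNT(*) FROM src")
tgt_count = scalar("SELECT COUNT(*) FROM tgt")

print("source minus target:", src_minus_tgt)  # mismatching rows on the source side
print("target minus source:", tgt_minus_src)  # mismatching rows on the target side
print("intersect:", matched, "source:", src_count, "target:", tgt_count)

# Distinct rows = intersect + minus; any surplus rows are duplicates.
src_has_dupes = src_count > matched + len(src_minus_tgt)
tgt_has_dupes = tgt_count > matched + len(tgt_minus_src)
print("duplicates in source:", src_has_dupes, "in target:", tgt_has_dupes)
```

The case change in row 2 shows up in both minus results, while the repeated `gamma` row in the target only shows up through the count arithmetic.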

Test Scenario: Data Cleanliness

Test Cases:

- Verify that unnecessary columns are deleted before loading into the staging area.

By designing and executing these test scenarios and cases, you can thoroughly test the ETL process and ensure data accuracy and integrity.

ETL Testing Methodologies

We have learned that ETL testing is a crucial part of the ETL process, ensuring the accuracy of the data extracted, transformed, and loaded into the data warehouse. Several methodologies are used to test the ETL system thoroughly. Here are some common ETL testing methods with specific test scenarios.

- Unit testing: It focuses on verifying individual components or units of the ETL system, such as mappings and business rules, at an early stage of development.

Test Scenarios:

- Verify the mapping of fields present in all tables.

- Verify the data type constraints of all fields.

- Validate the correctness of surrogate keys.

- Check error messages generated during data loading into the target table.

- Verify the data flow between the source, transformation, and target components using data profiling in ETL tools.

- Check that string columns are trimmed on both the left and right.

- Integration testing: It ensures that end-to-end workflows, or the entire ETL package, function correctly and produce the expected outcomes.

Test Scenarios:

- Verify the data loading process from the feed to the staging layer, ODS (Operational Data Store), and data mart layers.

- Validate the initial and incremental data load methodologies.

- Verify that all target tables are loaded with the correct data.

- Monitor the execution time and performance of ETL packages.

- Validate job configurations and schedules.

- System testing: It validates that all transformation logic in the ETL system is implemented correctly according to the business requirements, with a primary emphasis on data validation.

Test Scenarios:

- Ensure the number of records in source tables matches the number in target tables.

- Verify duplicate checks and confirm that NULL values are handled appropriately.

- Validate that data is loaded in the expected format based on transformation rules.

- Verify the accuracy of aggregated measures in the data mart tables.
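At the unit level, a transformation can be tested in isolation before it runs against real tables. The sketch below uses Python's `unittest` against a hypothetical `transform_customers` function that trims names and assigns sequential surrogate keys; it illustrates the trimming and surrogate-key scenarios listed above rather than any particular ETL tool's API.

```python
import unittest


def transform_customers(rows, start_key=1):
    """Hypothetical transformation: trim names and assign sequential surrogate keys."""
    return [
        {
            "customer_sk": start_key + offset,  # surrogate key generated by the ETL job
            "source_id": row["id"],             # natural key carried over from the source
            "name": row["name"].strip(),        # remove leading and trailing whitespace
        }
        for offset, row in enumerate(rows)
    ]


class TransformCustomersTest(unittest.TestCase):
    def setUp(self):
        self.source = [{"id": "C7", "name": "  Ada "}, {"id": "C9", "name": "Grace"}]
        self.result = transform_customers(self.source, start_key=100)

    def test_strings_are_trimmed(self):
        self.assertEqual([r["name"] for r in self.result], ["Ada", "Grace"])

    def test_surrogate_keys_are_unique_and_sequential(self):
        keys = [r["customer_sk"] for r in self.result]
        self.assertEqual(len(keys), len(set(keys)))
        self.assertEqual(keys, [100, 101])

    def test_every_source_row_is_mapped(self):
        self.assertEqual([r["source_id"] for r in self.result], ["C7", "C9"])


if __name__ == "__main__":
    # exit=False keeps the interpreter running, e.g. when called from a CI script.
    unittest.main(argv=["etl-unit-tests"], exit=False)
```

Because the tests exercise the transformation in memory, they run in milliseconds and can be repeated on every change, before integration testing touches the staging or data mart layers.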

These methodologies and their test scenarios help ensure the reliability and quality of the ETL process by detecting data discrepancies, transformation errors, and performance issues. To make them repeatable and accurate, these methodologies are usually automated, as the next section explains.

Automation of ETL Testing

Automation testing is a widely used approach to ETL testing that relies on software tools and technologies to automate the testing process, making ETL testing more efficient and accurate. Traditional approaches, such as manual SQL scripting and visual data inspection, are more time-consuming and error-prone. Compared with these traditional approaches, automation is preferred for the following reasons:

- Automation makes it practical to run many more test cases, including edge cases, which improves test coverage.

- You can reduce manual effort and testing time when ETL testing is automated.

- Automated tests run consistently, which reduces the human errors common in manual testing.

- You can detect defects in the ETL system early by running tests repeatedly.

- The tools used to automate ETL testing can be integrated with different ETL platforms, data sources, and databases.


ETL Test Tools

To perform automated ETL testing, you can use various automation testing tools that streamline the ETL test process. Some commonly used ETL testing tools are as follows:

- RightData: RightData (RDt) is a user-friendly ETL testing tool that aims to assist teams in automating the processes of testing data quality, ensuring data assurance, and performing data QC.

- iCEDQ: iCEDQ serves as a comprehensive data testing and monitoring platform. It allows organizations to automate ETL testing, cloud data migration testing, and data monitoring.

- Integrate.io: Integrate.io is a cloud-based data integration, ETL, and ELT tool. Its intuitive graphical interface simplifies data processing and enables the implementation of ETL, ELT, or replication solutions.

- DataQ: DataQ is an AI-powered ETL/ELT testing tool that its vendor describes as the first of its kind to support hybrid and multi-cloud environments. It is designed to scale and handles both structured and semi-structured data.

- BiG EVAL: BiG EVAL presents a comprehensive suite of software tools designed to maximize the value of business data by continuously validating and monitoring its quality. It automates testing tasks during the ETL process and provides valuable quality statistics in production.

How To Select ETL Test Tools?

ETL testing tools can increase IT productivity and simplify ETL testing, including testing of data drawn from big data sources. They apply predefined procedures and rules for data extraction and processing, reducing the need for traditional hand-written programming.

Many ETL test tools offer built-in compatibility with cloud data warehouses and with ERP and CRM platforms, as well as products such as Oracle, Informatica, and Google Cloud Platform. Because these tools play such a central role, it is important to choose the ones that best align with your organization's requirements. Whether you choose open-source or commercial tools, look for the following features before selecting ETL testing tools:

- Graphical interface: The tools should have a user-friendly graphical interface that makes the design and development of the ETL process simpler.

- Automated generation of code: The tools should be able to generate code automatically to speed up development, save time, and reduce human error.

- Built-in data connectors: The selected tools must be able to access data stored in different sources and formats, such as databases, packaged applications, and legacy systems.

- Content management facilities: The tools should include content management facilities that make it easy to switch between ETL development, testing, and production environments.

- Sophisticated debugging tools: ETL tools must have debugging features that enable real-time data flow tracking and provide comprehensive reports on row-by-row behavior. This, in turn, helps in efficient issue resolution and optimization.

Steps to perform ETL Testing

For creating and performing effective ETL tests, it is crucial to understand the ETL process, business requirements, and data sources. Here are the crucial steps for performing ETL testing:

- Gathering business requirements: The initial and crucial step in ETL testing involves understanding and capturing the business requirements. This includes designing data models, business flows, schematic lane diagrams, and reports. By comprehending the business requirements, testers gain awareness of what needs to be tested. It is essential for the team to thoroughly document the project's scope to ensure a comprehensive understanding by the testers.

- Identifying and validating data sources: The subsequent step is to identify the source data and perform preliminary checks such as schema verification, count validation, and table validation. These checks ensure that the ETL process aligns with the business model specifications. Additionally, primary keys are verified according to the model, and precautions are taken to avoid duplicate data, which can lead to inaccurate aggregation.

- Extracting data from source systems: You can start ETL testing by extracting data from the source systems. However, you must ensure that all data are extracted correctly and thoroughly.

- Creating test cases: Next, test cases are created, which involve source-to-target mapping, transformation design based on business requirements, and SQL scripts for comparing source and target data. Furthermore, the created documents are validated against the business requirements to ensure alignment with the intended objectives.

- Executing test cases: This step involves executing the created test cases in the QA (Quality Assurance) environment to identify bugs or defects. QA analysts attempt to reproduce the defects and log them with appropriate comments and screenshots. The ETL tests are conducted according to the defined business requirements.

- Generating reports: Once all defects are logged into the defect management system (typically Jira), they are assigned to the relevant stakeholders for resolution. Analysts ensure that they capture relevant screenshots, outline the steps to reproduce the test cases, and document the actual versus expected results for each test case.

- Retesting resolved bugs: After the developer fixes a bug, it undergoes retesting in the same environment to ensure complete resolution without any remaining traces. Additionally, regression testing is performed to ensure that fixing the previous bug has not introduced any new issues.

- Applying transformation logic: After fixing the bugs and closing the bug reports, you have to ensure that the tested data is transformed correctly, in line with the schema and mapping documents for the target data warehouse.

Here, you have to check the data types and threshold limits and check whether the mapping of each column and table is accurate. You should also verify that the data flow is correct throughout the ETL process. For this, you must check the alignment of data elements, ensure data integrity, and validate that there are no data flow issues.

- Loading data into the target warehouse: Once you have validated the transformation of data, you load the data into the target data warehouse. Perform a record count check before and after the load to ensure data consistency. During the loading process, validate that any invalid or erroneous data is rejected and not included in the target data warehouse. You must also confirm that default values are accepted according to the defined business rules.

- Creating summary reports: Reports are generated based on the recorded bugs and test cases and uploaded into the defect management system. These reports help stakeholders understand the identified bugs and the overall results of the testing process, and confirm that the agreed delivery thresholds have been met.

In addition, the summary report should be verified for its layout, options, filters, and export functionality. Such a report helps stakeholders understand the critical details and results of the testing process.

- Closing the reports: The final step involves closing the reports upon completion of all tasks, accompanied by comprehensive comments and attachments of relevant files related to the test cases and business requirements.
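The reconciliation described in the loading step, where every source record is either loaded or explicitly rejected and default values follow the business rules, can be sketched in a few lines. The validation rules and the `"web"` default for a missing `channel` are hypothetical.

```python
# Hypothetical source rows; the rules below stand in for real business rules.
source_rows = [
    {"id": 1, "country": "US", "amount": 120.0},
    {"id": 2, "country": None, "amount": 50.0},
    {"id": 3, "country": "DE", "amount": -10.0},
    {"id": 4, "country": "FR", "amount": 75.5, "channel": "store"},
]

DEFAULT_CHANNEL = "web"  # assumed default value defined by the business rules


def validate(row):
    """Return a rejection reason, or None if the row may be loaded."""
    if row["country"] is None:
        return "country is required"
    if row["amount"] < 0:
        return "amount must be non-negative"
    return None


loaded, rejected = [], []
for row in source_rows:
    reason = validate(row)
    if reason is None:
        # Apply the default only when the source did not supply a value.
        loaded.append({**row, "channel": row.get("channel", DEFAULT_CHANNEL)})
    else:
        rejected.append((row["id"], reason))

# Reconciliation: every source record is either loaded or explicitly rejected.
assert len(source_rows) == len(loaded) + len(rejected), "records were lost during load"
# Invalid rows must never reach the target.
assert all(validate(row) is None for row in loaded)
print(f"loaded={[r['id'] for r in loaded]} rejected={rejected}")
```

Recording a reason with each rejected row also gives the summary report something concrete to show stakeholders.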

Authentications Required in the ETL Testing

The following checks should be performed during ETL testing to avoid errors or omissions.

- Check that data extraction is done accurately so you do not miss any data.

- Confirm the success of the transformation phase.

- Ensure that no data is truncated or cut off while loading it into the target data warehouse.

- Check that the target data warehouse rejects all invalid data.

- Ensure that all duplicate data is eliminated.

- Ensure that testing reports are accurately generated with all the required information.

Now let us see the bugs generally identified during the ETL testing process.

Types of ETL Bugs

When performing ETL testing, you may encounter bugs that interfere with the extraction, transformation, and loading phases. Here is a list of common bugs:

- The attribute's data type and length may differ from the required mapping.

- Incorrect utilization of referential integrity constraints.

- Non-null fields are populated with NULL values.

- Data is missing from tables due to the absence of integrity constraints.

- Target tables do not contain accurate data after loading.

- Incorrect creation of views for data processing.

- Unexpected duplication of data during loading.

- Data does not adhere to specified business rules.

- A mismatch between column size and data type causes character truncation.

- Improper mapping of columns in the ETL process leads to incorrect data or NULL values in the target.

- Errors in executing transformation logic result in flawed data in the target table.

Choosing the Right ETL Test Approach

As we saw in the previous section on the ETL testing process, it involves various stages, different types of tests, and the use of tools. Before starting ETL testing, you must determine which methods and tools best fit your organization. For example, many organizations use SQL scripting to test the ETL process.

This approach can lower tooling costs; however, it may take longer than using existing data integration solutions that support ETL testing. Therefore, it is advisable to consult all pertinent stakeholders before choosing an ETL test approach and agree on how to proceed.

You can discuss the following parameters for choosing the right ETL test approach:

- Data volume

- Complexity of transformation

- Performance requirements

- Data quality

- Error handling and logging

- Scalability and flexibility

- Automation and reusability

Difference between ETL and Database Testing

| Aspect | ETL Testing | Database Testing |
|---|---|---|
| Focus | Data movement from multiple sources to the data warehouse | Database rules and constraints of an application's database |
| Data Verification | Verify data counts in source and target databases | Verify that every column in the table has valid data values |
| Purpose | Verify extraction, transformation, and loading of data | Verify the application's CRUD operations and that they meet business needs |
| Data Scope | Large volumes of data, historical data, and multiple sources | Transactional data related to business operations |
| Processing | Online Analytical Processing (OLAP) | Online Transaction Processing (OLTP) |
| Data Structure | Not normalized, with more indexes and aggregations and fewer joins | Normalized, with more joins |
| Testing Objectives | Data extraction, transformation, loading, and report verification | Application logic and data operation verification |
| Dimension | Multi-dimensional testing | Limited to business and application logic |

ETL Testing Challenges

When you perform ETL testing, you will encounter several challenges that hinder the effective flow of data from the source to the target data warehouse. Some common ETL testing challenges are as follows:

- Data transformation complexity: Transforming large datasets can be both time-consuming and intricate.

- Poor data quality: Data often contains errors and is messy. ETL testing requires clean and accurate data for reliable results.

- Resource intensiveness: ETL testing can burden resources, especially when dealing with complex and voluminous source systems.

- Data source changes: Changes in data sources can affect the completeness and accuracy of the data.

- Complex processes: Integrating complex data and business processes can lead to complications during ETL tests.

- Slow performance: Processing delays or sluggish end-to-end performance caused by enormous data volumes can impact data accuracy and completeness.

- Finding skilled team members: It can be challenging to find individuals with expertise in ETL and data health.

Best Practices for ETL Testing

Some of the best practices for ETL testing are explained below, which will help you overcome the challenges mentioned earlier and optimize the ETL test process.

- Source data understanding and analysis: Ensure a thorough understanding of the data volume and types that will be processed. Familiarize yourself with data schema and other pertinent details to test the database's metadata effectively.

- Data flow: Comprehend the data flow model between the Feed-Stage-ODS-Datamart layers. Obtain detailed information about stored procedures or workflows for loading data across multiple layers. Gain insights into crucial tables, columns, and table relationships to facilitate efficient load testing of data.

- Load pattern: Grasp the data load pattern, including incremental and full load processes that operate daily. It is vital to validate the data loading process and ensure its accuracy.

- Business rules: Understand the transformation logic defined by the business and implement queries on feed tables to compare the source data with BI report data. This helps ensure that the data aligns with the expected business rules.

- Test data generation: Generate test data based on defined scenarios so that business rules can be tested accurately. This allows for thorough validation of the data against the expected outcomes.

- ETL performance: Execute ETL jobs using large volumes of data and monitor the processing time taken by the ETL package. Ensuring efficient performance is essential for timely data processing and delivery.
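To tie the load-pattern and performance practices together, the sketch below runs a hypothetical incremental job that upserts only rows changed after the last watermark, then checks that source and target match and records how long the job took. It assumes SQLite (whose `INSERT OR REPLACE` performs the upsert) and ISO-formatted timestamp strings, which compare correctly as text.

```python
import sqlite3
import time

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_product (product_id INTEGER PRIMARY KEY, price REAL, updated_at TEXT);
    CREATE TABLE tgt_product (product_id INTEGER PRIMARY KEY, price REAL, updated_at TEXT);
    INSERT INTO src_product VALUES
        (1, 9.99, '2023-07-15 10:00:00'),
        (2, 4.50, '2023-07-16 08:30:00'),
        (3, 7.25, '2023-07-17 09:15:00');
    -- Target state as of the previous run.
    INSERT INTO tgt_product VALUES (1, 9.99, '2023-07-15 10:00:00');
""")
last_watermark = "2023-07-16 00:00:00"  # hypothetical value stored by the previous run


def incremental_load(conn, watermark):
    """Hypothetical incremental job: upsert source rows changed after the watermark."""
    changed = conn.execute(
        "SELECT product_id, price, updated_at FROM src_product WHERE updated_at > ?",
        (watermark,),
    ).fetchall()
    conn.executemany("INSERT OR REPLACE INTO tgt_product VALUES (?, ?, ?)", changed)
    return len(changed)


start = time.perf_counter()
changed_count = incremental_load(conn, last_watermark)
elapsed = time.perf_counter() - start  # compare against an agreed time budget

# After the increment, source and target should be identical.
diff = conn.execute("SELECT * FROM src_product EXCEPT SELECT * FROM tgt_product").fetchall()
print(f"changed rows={changed_count}, mismatches={diff}, elapsed={elapsed:.4f}s")
```

In a real performance test, the same measurement would be repeated with production-scale volumes rather than three rows.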

Conclusion

In this tutorial, we have explained ETL testing and its related concepts, which will help you gain insight into how to get started. ETL testing plays a significant role in ensuring the accuracy and reliability of data in the ETL process, and it has become crucial for organizations to identify potential issues that may affect data quality.

We have explored the various aspects of ETL testing: its objectives, uses, and needs, the ETL process, testing methods, best practices, and challenges. ETL testers should be familiar with these concepts to execute the test process successfully.

As technology advances and data volumes grow, effective ETL testing becomes increasingly important. Leveraging test automation, automation testing frameworks, and the Agile development approach will help you optimize the ETL test process.


Author's Profile

Nazneen Ahmad

Nazneen Ahmad is an experienced technical writer with over five years of experience in the software development and testing field. As a freelancer, she has worked on various projects to create technical documentation, user manuals, training materials, and other SEO-optimized content in various domains, including IT, healthcare, finance, and education. You can also follow her on Twitter.

Reviewer's Profile

Shahzeb Hoda

Shahzeb currently holds the position of Senior Product Marketing Manager at LambdaTest and brings over a decade of experience in the Quality Engineering, Security, and E-Learning domains. Over his 3-year tenure at LambdaTest, he has actively contributed to the review of blogs, learning hubs, and product updates. He holds a Master's degree (M.Tech) in Computer Science and has extensive knowledge of web development and software testing, including automation testing, DevOps, continuous testing, and beyond.
